Versions

hive 3.1.2

mariadb 10.3.xx

mysql-connector-java 8.0.26

java: JDK 8 (1.8.0)

hadoop 3.1.1

If you followed my earlier posts, Java and Hadoop are already installed.

Now it's time to install Hive.

Before that, install MariaDB.

Source

https://zetawiki.com/wiki/CentOS7_MariaDB_%EC%84%A4%EC%B9%98

<mariadb>

$ mkdir /mariadb_home

$ cd /mariadb_home

$ vi /etc/yum.repos.d/MariaDB.repo

[mariadb]

name = MariaDB

baseurl = http://yum.mariadb.org/10.3/centos7-amd64

gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB

gpgcheck=1
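The repo file above can also be written without opening vi. This is a minimal sketch: `write_mariadb_repo` is a hypothetical helper name, and the file contents are exactly the five lines shown above.

```shell
#!/usr/bin/env bash
# Write the MariaDB 10.3 yum repo definition to the given path.
# Typical use (as root): write_mariadb_repo /etc/yum.repos.d/MariaDB.repo
write_mariadb_repo() {
  local dest="$1"
  # Quoted 'EOF' so the shell expands nothing inside the heredoc
  cat > "$dest" <<'EOF'
[mariadb]
name = MariaDB
baseurl = http://yum.mariadb.org/10.3/centos7-amd64
gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB
gpgcheck=1
EOF
}
```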

$ yum install MariaDB -y

$ rpm -qa | grep MariaDB

Installing Hive

https://dlcdn.apache.org/hive/

Pick the matching version -> copy the link to the -bin tarball

$ mkdir /hive_home

$ cd /hive_home

$ wget https://dlcdn.apache.org/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz

For convenience, extract it into /usr/local.

$ tar -zxvf apache-hive-3.1.2-bin.tar.gz -C /usr/local/

User environment settings

$ vi ~/.bashrc

### add ###

export HIVE_HOME=/usr/local/apache-hive-3.1.2-bin

PATH="$HOME/.local/bin:$HOME/bin:$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin"

export PATH

### add ###

$ source ~/.bashrc

Create the HDFS directories Hive uses:

$ hdfs dfs -mkdir /tmp

$ hdfs dfs -mkdir -p /user/hive/warehouse

$ hdfs dfs -chmod g+w /tmp

$ hdfs dfs -chmod -R g+w /user
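The .bashrc edit and the HDFS directories can also be scripted so that re-running them is harmless. A minimal sketch, assuming bash and that the JAVA_HOME/HADOOP_HOME PATH entries from the earlier posts are already in place; `add_hive_env`, `make_hive_hdfs_dirs` and the `HDFS_CMD` override (only there to swap in a fake command for testing) are hypothetical names.

```shell
#!/usr/bin/env bash
# Append HIVE_HOME and its bin/ to PATH in an rc file, once.
# Usage: add_hive_env ~/.bashrc /usr/local/apache-hive-3.1.2-bin && source ~/.bashrc
add_hive_env() {
  local rc="$1" hive_home="$2"
  # Skip if HIVE_HOME is already exported, so a second run adds nothing
  if grep -q '^export HIVE_HOME=' "$rc" 2>/dev/null; then
    return 0
  fi
  # Unquoted heredoc: $hive_home expands now, \$PATH stays literal for later
  cat >> "$rc" <<EOF
export HIVE_HOME=$hive_home
export PATH="\$PATH:\$HIVE_HOME/bin"
EOF
}

# Create Hive's HDFS directories; -p means existing directories are not an error.
# HDFS_CMD (hypothetical) defaults to the real hdfs command.
make_hive_hdfs_dirs() {
  local hdfs="${HDFS_CMD:-hdfs}"
  "$hdfs" dfs -mkdir -p /tmp
  "$hdfs" dfs -mkdir -p /user/hive/warehouse
  "$hdfs" dfs -chmod g+w /tmp
  "$hdfs" dfs -chmod -R g+w /user
}
```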

[mariadb]

$ systemctl start mariadb

$ /usr/bin/mysqladmin -u root password '1234'

$ netstat -anp | grep 3306

$ mysql -u root -p

# check that you can log in

mariadb> quit

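If logging in as root fails, reset the root password with the grant tables disabled:

$ systemctl stop mariadb

$ systemctl set-environment MYSQLD_OPTS="--skip-grant-tables"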

$ systemctl start mariadb

$ systemctl status mariadb

$ mysql -u root

mariadb> FLUSH PRIVILEGES;

mariadb> ALTER USER 'root'@'localhost' IDENTIFIED BY '1234';

mariadb> FLUSH PRIVILEGES;

mariadb> exit

$ systemctl stop mariadb

$ systemctl unset-environment MYSQLD_OPTS

$ systemctl start mariadb

$ systemctl status mariadb

$ mysql -u root -p

mariadb> CREATE DATABASE metastore DEFAULT CHARACTER SET utf8;

mariadb> CREATE USER 'hive'@'localhost' IDENTIFIED BY '1234';

mariadb> GRANT ALL PRIVILEGES ON metastore.* TO 'hive'@'localhost';

mariadb> FLUSH PRIVILEGES;

mariadb> SELECT Host,User FROM mysql.user;

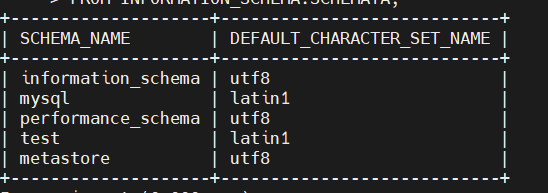

mariadb> SELECT SCHEMA_NAME, DEFAULT_CHARACTER_SET_NAME

-> FROM INFORMATION_SCHEMA.SCHEMATA;

If both queries return results, exit.
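The metastore SQL above can be generated and piped into the client in one step. A sketch: `metastore_sql` is a hypothetical helper, `IF NOT EXISTS` (supported by MariaDB 10.3 for both CREATE DATABASE and CREATE USER) makes it safe to re-run, and the password is assumed not to contain a single quote, since it is pasted into a SQL string literal.

```shell
#!/usr/bin/env bash
# Print the SQL that creates the metastore database and the 'hive' user.
# Usage: metastore_sql '1234' | mysql -u root -p
metastore_sql() {
  local pw="$1"
  # Refuse passwords that would break out of the SQL string literal
  case "$pw" in
    *"'"*) echo "password must not contain a single quote" >&2; return 1 ;;
  esac
  cat <<EOF
CREATE DATABASE IF NOT EXISTS metastore DEFAULT CHARACTER SET utf8;
CREATE USER IF NOT EXISTS 'hive'@'localhost' IDENTIFIED BY '$pw';
GRANT ALL PRIVILEGES ON metastore.* TO 'hive'@'localhost';
FLUSH PRIVILEGES;
EOF
}
```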

<Install the MySQL connector!>

1. Check the version

https://dev.mysql.com/doc/connector-j/5.1/en/connector-j-versions.html

2. Download

https://downloads.mysql.com/archives/c-j/

$ mkdir /mysql_cnt_home

$ cd /mysql_cnt_home

Select 8.0.26 -> Platform Independent -> copy the .tar.gz link and download it with wget

$ tar -zxvf mysql-connector-java-8.0.26.tar.gz

$ cd mysql-connector-java-8.0.26

$ mv mysql-connector-java-8.0.26.jar /usr/local/apache-hive-3.1.2-bin/lib
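Before editing hive-site.xml, it can help to confirm that exactly one Connector/J jar ended up in Hive's lib directory, since leftover jars of different versions make driver errors harder to track down. `check_connector` is a hypothetical helper for that check.

```shell
#!/usr/bin/env bash
# Report the MySQL Connector/J jar(s) in a Hive lib directory.
# Usage: check_connector /usr/local/apache-hive-3.1.2-bin/lib
# Returns 0 only if exactly one mysql-connector-java-*.jar is present.
check_connector() {
  local libdir="$1" f
  local jars=()
  for f in "$libdir"/mysql-connector-java-*.jar; do
    # With no match the glob stays literal, so keep only real files
    [ -e "$f" ] && jars+=("$f")
  done
  case "${#jars[@]}" in
    1) echo "OK: ${jars[0]##*/}"; return 0 ;;
    0) echo "missing: no mysql-connector-java-*.jar in $libdir" >&2; return 1 ;;
    *) echo "conflict: ${#jars[@]} connector jars in $libdir" >&2; return 1 ;;
  esac
}
```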

$ cd /usr/local/apache-hive-3.1.2-bin/conf

$ cp hive-default.xml.template hive-site.xml

$ vim hive-site.xml

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>password</value>

</property>

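</configuration>

In vim, type / followed by each name above to jump to the existing property, then change its value. For ConnectionPassword, use the hive user's password (1234 in this post).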
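After editing, the four values can be read back from the file to confirm the changes stuck. A sketch using awk: `hive_prop` is a hypothetical helper, and it assumes each value sits on a single line, as in hive-default.xml.template.

```shell
#!/usr/bin/env bash
# Print the <value> of a named property in hive-site.xml (empty if not found).
# A value split across several lines is not handled.
hive_prop() {
  local file="$1" name="$2"
  awk -v want="<name>$name</name>" '
    index($0, want) { found = 1 }
    found && match($0, /<value>.*<\/value>/) {
      # drop the 7-char "<value>" and the 8-char "</value>" tags
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$file"
}

# Usage:
# for p in javax.jdo.option.ConnectionURL javax.jdo.option.ConnectionDriverName \
#          javax.jdo.option.ConnectionUserName javax.jdo.option.ConnectionPassword; do
#   printf '%s = %s\n' "$p" "$(hive_prop "$HIVE_HOME/conf/hive-site.xml" "$p")"
# done
```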

# Three errors to fix!

1. Match the guava version

rm $HIVE_HOME/lib/guava-19.0.jar

cp $HADOOP_HOME/share/hadoop/hdfs/lib/guava-11.0.2.jar /usr/local/apache-hive-3.1.2-bin/lib

2. Remove the special character

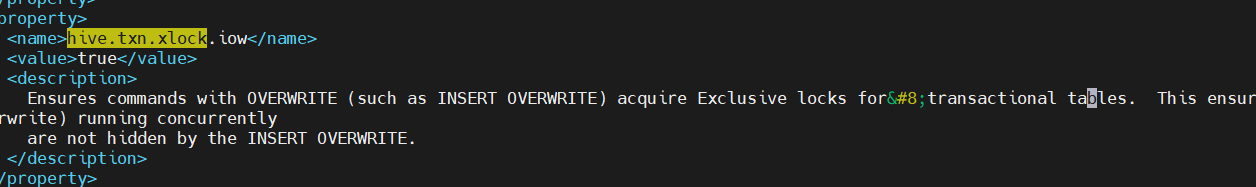

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.RuntimeException: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3215,96,"file:/usr/local/apache-hive-3.1.2-bin/conf/hive-site.xml"]

cd $HIVE_HOME/conf

vim hive-site.xml -> search for hive.txn.xlock.iow and delete the &#8; character entity in its description (the error points to row 3215, column 96).

3. Driver class

Set the value of javax.jdo.option.ConnectionDriverName to com.mysql.cj.jdbc.Driver (the driver class name for Connector/J 8.x).
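The three fixes above can be bundled into small functions. A sketch that mirrors the steps in this post: `fix_guava`, `strip_entity8` and `set_mysql_driver` are hypothetical names, GNU sed (the CentOS default) is assumed, and each sed edit keeps its own backup copy next to the file.

```shell
#!/usr/bin/env bash
# Fix 1: replace Hive's guava-19.0.jar with Hadoop's guava-11.0.2.jar.
# Usage: fix_guava "$HIVE_HOME" "$HADOOP_HOME"
fix_guava() {
  local hive_home="$1" hadoop_home="$2"
  local src="$hadoop_home/share/hadoop/hdfs/lib/guava-11.0.2.jar"
  [ -f "$src" ] || { echo "not found: $src" >&2; return 1; }
  rm -f "$hive_home/lib/guava-19.0.jar"
  cp "$src" "$hive_home/lib/"
}

# Fix 2: replace every &#8; entity with a space (backup: <file>.bak-entity).
strip_entity8() {
  sed -i.bak-entity 's/&#8;/ /g' "$1"
}

# Fix 3: set the ConnectionDriverName value (backup: <file>.bak-driver).
# The range runs from the <name> line to the next </value>, so it assumes
# <name> and <value> are on separate lines, as in the template.
set_mysql_driver() {
  sed -i.bak-driver '/<name>javax\.jdo\.option\.ConnectionDriverName<\/name>/,/<\/value>/ s|<value>.*</value>|<value>com.mysql.cj.jdbc.Driver</value>|' "$1"
}

# Usage:
# fix_guava "$HIVE_HOME" "$HADOOP_HOME"
# strip_entity8 "$HIVE_HOME/conf/hive-site.xml"
# set_mysql_driver "$HIVE_HOME/conf/hive-site.xml"
```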

$ schematool -dbType mysql -initSchema

# success if "schemaTool completed" is printed

$ mysql -u hive -p

mariadb> show databases;

mariadb> use metastore;

mariadb> show tables;

If a long list of tables is printed, it worked.

## Hive test

$ hive

->

Exception in thread "main" java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

If you get this error, find the following properties in hive-site.xml:

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp/hive</value>

<description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/&lt;username&gt; is created, with ${hive.scratch.dir.permission}.</description>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>${system:java.io.tmpdir}/${system:user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>${system:java.io.tmpdir}/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.scratch.dir.permission</name>

<value>700</value>

<description>The permission for the user specific scratch directories that get created.</description>

</property>

and change them as follows:

<name>hive.exec.scratchdir</name>

<value>/tmp/hive-${user.name}</value>

<name>hive.exec.local.scratchdir</name>

<value>/tmp/${user.name}</value>

<name>hive.downloaded.resources.dir</name>

<value>/tmp/${user.name}_resources</value>

<name>hive.scratch.dir.permission</name>

<value>733</value>

Testing in the hive> shell
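Before starting hive, the four hive-site.xml changes above can also be applied without vim. A sketch: `set_hive_prop` is a hypothetical helper that assumes `<name>` and `<value>` sit on separate lines as in hive-default.xml.template, and it escapes the characters sed treats specially in a replacement (`\`, `&` and the `|` delimiter).

```shell
#!/usr/bin/env bash
# Replace the <value> of one property in hive-site.xml (backup kept as <file>.bak).
set_hive_prop() {
  local file="$1" name="$2" value="$3"
  local name_re value_esc
  name_re=$(printf '%s' "$name" | sed 's/[.]/\\./g')        # match dots literally
  value_esc=$(printf '%s' "$value" | sed 's/[\\&|]/\\&/g')  # escape \ & | for sed
  # From the <name> line to the next </value>, rewrite the value line
  sed -i.bak "/<name>${name_re}<\/name>/,/<\/value>/ s|<value>.*</value>|<value>${value_esc}</value>|" "$file"
}

# Usage (single quotes keep ${user.name} literal for Hive to expand):
# conf="$HIVE_HOME/conf/hive-site.xml"
# set_hive_prop "$conf" hive.exec.scratchdir '/tmp/hive-${user.name}'
# set_hive_prop "$conf" hive.exec.local.scratchdir '/tmp/${user.name}'
# set_hive_prop "$conf" hive.downloaded.resources.dir '/tmp/${user.name}_resources'
# set_hive_prop "$conf" hive.scratch.dir.permission 733
```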

hive> create database test; -- create the test database

hive> show databases;

hive> create table test.tab1 (

> col1 integer,

> col2 string

> );

hive> insert into table test.tab1

> select 1 as col1, 'ABCDE' as col2;
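The test statements above can also be kept in a file and run non-interactively with `hive -f`. `smoke_hql` is a hypothetical helper; it adds `IF NOT EXISTS` and a final `SELECT` so the script can be re-run and shows the inserted row.

```shell
#!/usr/bin/env bash
# Print the smoke-test HiveQL from this section.
# Usage: smoke_hql > /tmp/smoke.hql && hive -f /tmp/smoke.hql
smoke_hql() {
  cat <<'EOF'
CREATE DATABASE IF NOT EXISTS test;
SHOW DATABASES;
CREATE TABLE IF NOT EXISTS test.tab1 (
  col1 INT,
  col2 STRING
);
INSERT INTO TABLE test.tab1
SELECT 1 AS col1, 'ABCDE' AS col2;
SELECT * FROM test.tab1;
EOF
}
```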

====> If the INSERT fails with an error,

change the guava jar in Hadoop's share/lib and in hive/lib to version 19.0.

guava URL

https://mvnrepository.com/artifact/com.google.guava/guava/19.0
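Before and after swapping jars, listing every guava jar shows which versions are sitting in each directory. A sketch: `list_guava` and `guava_versions_agree` are hypothetical helpers, and searching all of `$HADOOP_HOME/share/hadoop` is an assumption about where Hadoop keeps its guava copies.

```shell
#!/usr/bin/env bash
# List every guava-*.jar under the given directories, sorted.
# Usage: list_guava "$HADOOP_HOME/share/hadoop" "$HIVE_HOME/lib"
list_guava() {
  find "$@" -name 'guava-*.jar' -type f 2>/dev/null | sort
}

# Succeed only if every guava jar found has the same version string.
guava_versions_agree() {
  local n
  n=$(list_guava "$@" | sed 's|.*/guava-\(.*\)\.jar$|\1|' | sort -u | wc -l)
  [ "$n" -eq 1 ]
}
```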

Source

https://sparkdia.tistory.com/12